Having spent the past few weeks crawling a customer's website, we have become keenly interested in the Screaming Frog SEO Spider software and what it can do. It is a Java application, and because it runs on the Java virtual machine it can use very large amounts of memory on a 64-bit (x64) system. The website we were examining had some 570,000 internal URIs, so crawling it and then analysing the results took a long time.

The slightly surprising part (although perhaps less so once we considered how the application must work) was that the crawl itself finished within a couple of days. It ran on a 32GB machine with 16GB set aside for Java and a 55MB dedicated fibre internet connection. The crawl produced a 5.7GB crawl file, which we then needed to export in various ways for different analyses in Excel. Loading that file, however, on the same machine with the same 16GB allocated to Java, had reached only 54% after two weeks.
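To put the 54% figure in perspective, a quick back-of-the-envelope calculation helps. The sketch below assumes the load progressed at a roughly constant rate (an assumption: progress bars are rarely linear) and uses decimal gigabytes; the function names are illustrative only.

```python
def projected_total_days(fraction_done: float, days_elapsed: float) -> float:
    """Extrapolate the total load time, assuming progress is linear in time."""
    if not 0 < fraction_done <= 1:
        raise ValueError("fraction_done must be in (0, 1]")
    return days_elapsed / fraction_done


def bytes_per_uri(file_size_gb: float, uri_count: int) -> float:
    """Average crawl-file size per URI, using decimal gigabytes (1 GB = 10**9 bytes)."""
    if uri_count <= 0:
        raise ValueError("uri_count must be positive")
    return file_size_gb * 10**9 / uri_count


# Figures from the crawl described above.
total = projected_total_days(0.54, 14)
per_uri = bytes_per_uri(5.7, 570_000)
print(f"Projected load time: about {total:.0f} days")
print(f"Average crawl data per URI: about {per_uri / 1000:.0f} KB")
```

Under the linear assumption, a single load on the 16GB configuration would have taken close to four weeks, with roughly 10 KB of crawl data per URI.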

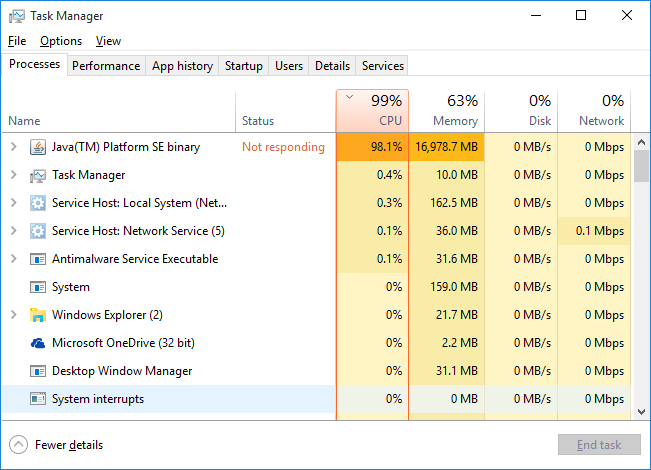

We then tried loading the same crawl file on a Dell PowerEdge R730xd with 256GB of RAM, 200GB of it set aside for Java, and it loaded within a few hours. The 32GB machine, by contrast, seemed to be struggling mostly with CPU: its performance statistics showed CPU usage above 90% for about two weeks.

So when we ran the same process on the server, which had similar processing power but much more RAM, it became obvious that the lack of RAM was making the CPUs do far more work. As soon as we raised the RAM allocated to Java on the 32GB machine to 28GB, we saw a sharp fall both in the CPU load the application demanded and in the time it took to load the crawl files.
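A plausible explanation, which we did not measure directly, is garbage collection: when the Java heap is nearly full, the JVM spends more and more CPU time trying to free a little space instead of doing useful work. One way to check this is the JDK's `jstat -gcutil <pid> <interval_ms>` tool, whose `GCT` column reports cumulative garbage-collection time in seconds. The sketch below compares two `GCT` readings to estimate the share of wall-clock time spent in GC. The function name and the sample rows are hypothetical, made up for illustration.

```python
def gc_overhead(header: str, earlier: str, later: str, interval_s: float) -> float:
    """Fraction of wall-clock time spent in GC between two `jstat -gcutil` rows.

    GCT is cumulative GC time in seconds, so its growth over the sampling
    interval, divided by that interval, approximates the share of time
    the JVM spent collecting garbage.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    # Look the column up by name: the set of columns differs between JDK versions.
    gct = header.split().index("GCT")
    start = float(earlier.split()[gct])
    end = float(later.split()[gct])
    return (end - start) / interval_s


# Made-up rows in the JDK 8 -gcutil layout, sampled 10 seconds apart.
header = "S0 S1 E O M CCS YGC YGCT FGC FGCT GCT"
row_1 = "0.00 0.00 100.00 99.98 95.12 90.40 812 41.210 305 2950.400 2991.610"
row_2 = "0.00 0.00 100.00 99.99 95.12 90.40 812 41.210 308 2959.900 3001.110"
print(f"Time spent in GC: {gc_overhead(header, row_1, row_2, 10):.0%}")
```

As a rough indicator, a value close to 100% with the old generation (`O`) pinned near full suggests a heap that is too small for the data being loaded.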

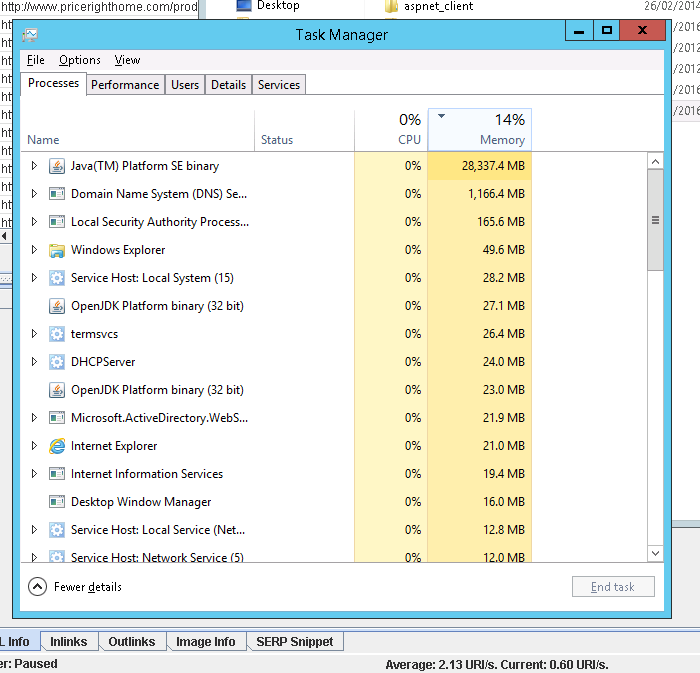

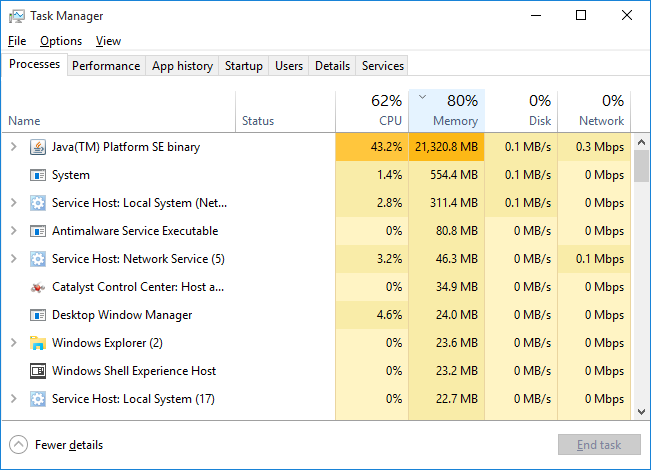

On the server, the application readily took the extra RAM, and Java kept it allocated for as long as the file remained loaded:

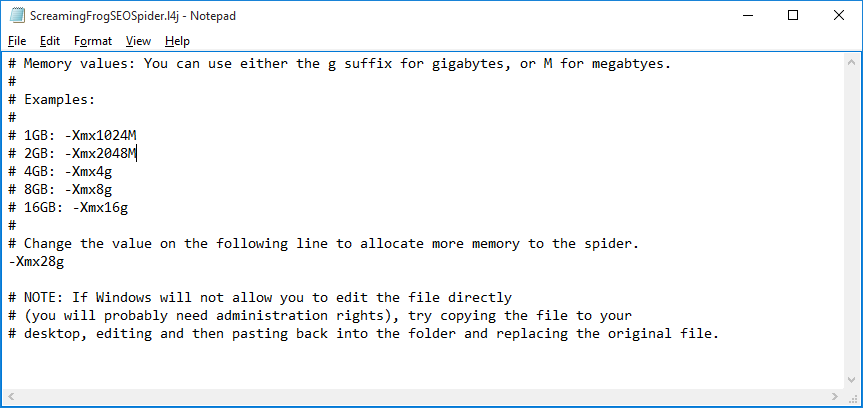

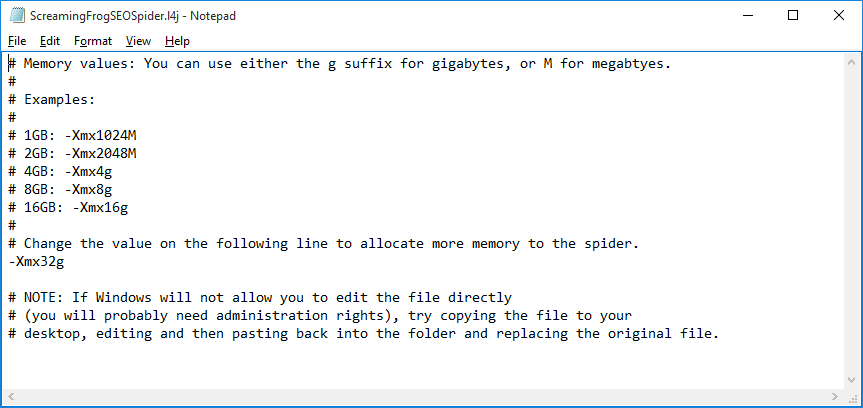

So on the 32GB machine we increased the RAM set aside for Java:

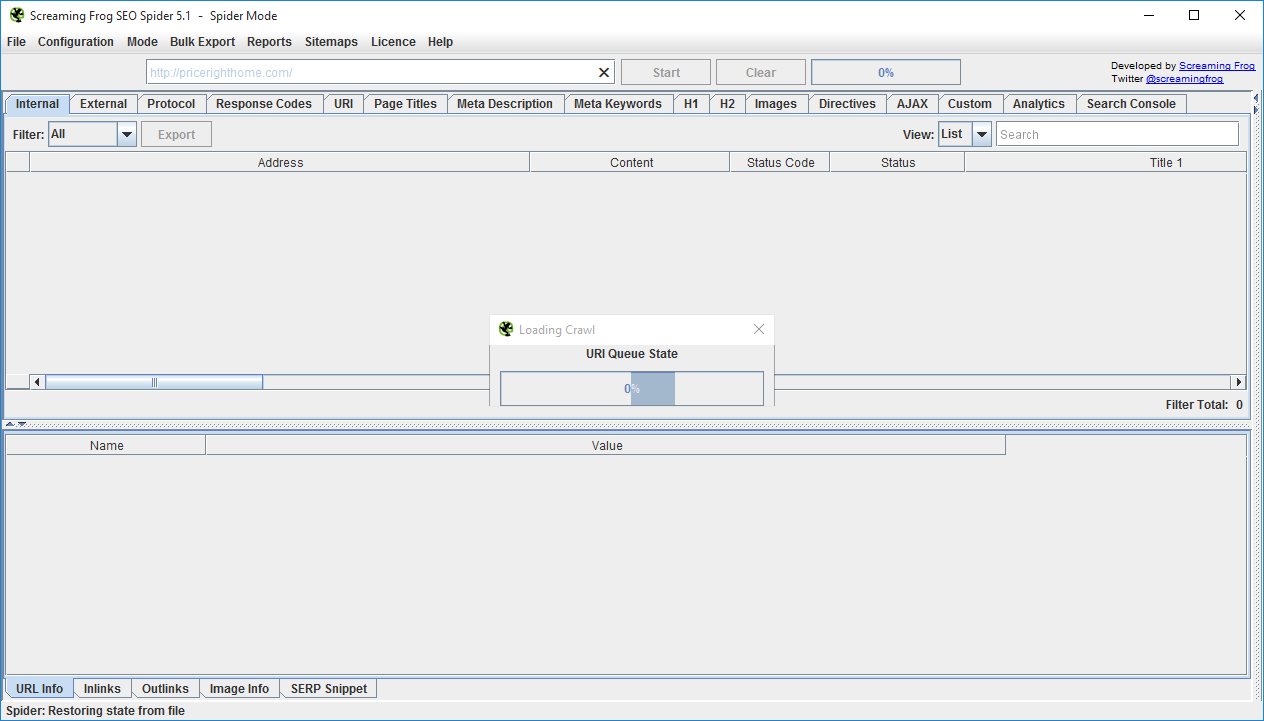

The 32GB machine (which could access only 30GB in this case) now uses that extra RAM, which lets Screaming Frog SEO load the crawl file.
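On the 32GB machine we ended up giving Java 28GB, leaving a few gigabytes for the operating system. The sketch below turns that kind of sizing into the standard JVM maximum-heap option (`-Xmx`). The function name and its 4GB default reserve are hypothetical, chosen only to reproduce the 28GB figure; this is not a Screaming Frog recommendation, and where the option is actually set depends on the Screaming Frog version and operating system.

```python
def xmx_flag(total_ram_gb: int, os_reserve_gb: int = 4) -> str:
    """Build a JVM -Xmx option that leaves os_reserve_gb for everything else.

    The 4 GB default is a hypothetical rule of thumb, not a vendor figure.
    """
    heap_gb = total_ram_gb - os_reserve_gb
    if heap_gb <= 0:
        raise ValueError("os_reserve_gb must be smaller than total_ram_gb")
    return f"-Xmx{heap_gb}g"


print(xmx_flag(32))                     # -Xmx28g, the 32GB workstation
print(xmx_flag(256, os_reserve_gb=56))  # -Xmx200g, the R730xd's allocation
```

Pushing the heap close to physical RAM only helps if nothing else on the machine needs that memory; once the operating system starts paging, performance gets worse rather than better.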

The moral of the story? When you run Screaming Frog SEO, or for that matter any Java application that loads large amounts of data, RAM is the most important factor, because having enough of it stops the CPUs doing all the work. In most of the RAM-starved configurations we tried, Screaming Frog SEO never finished loading and simply cooked the CPUs for days.